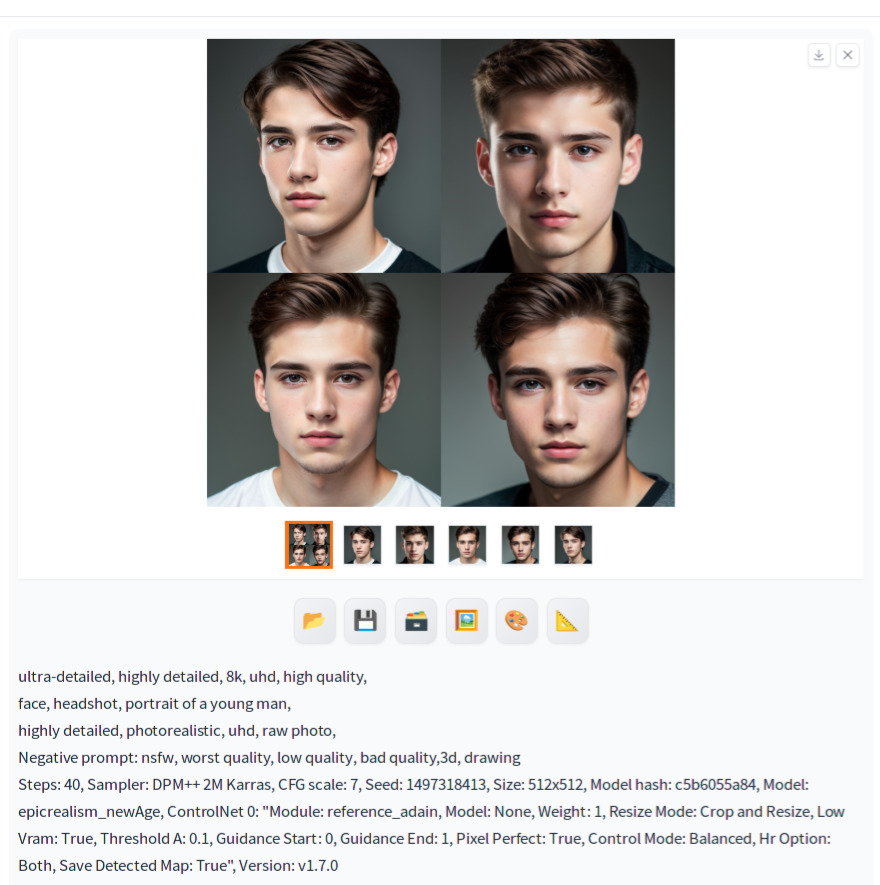

What if you have generated an image and want to generate more images that are very similar to it, with only minor changes? This was a tough problem to solve in Stable Diffusion. For example, consider the image below.

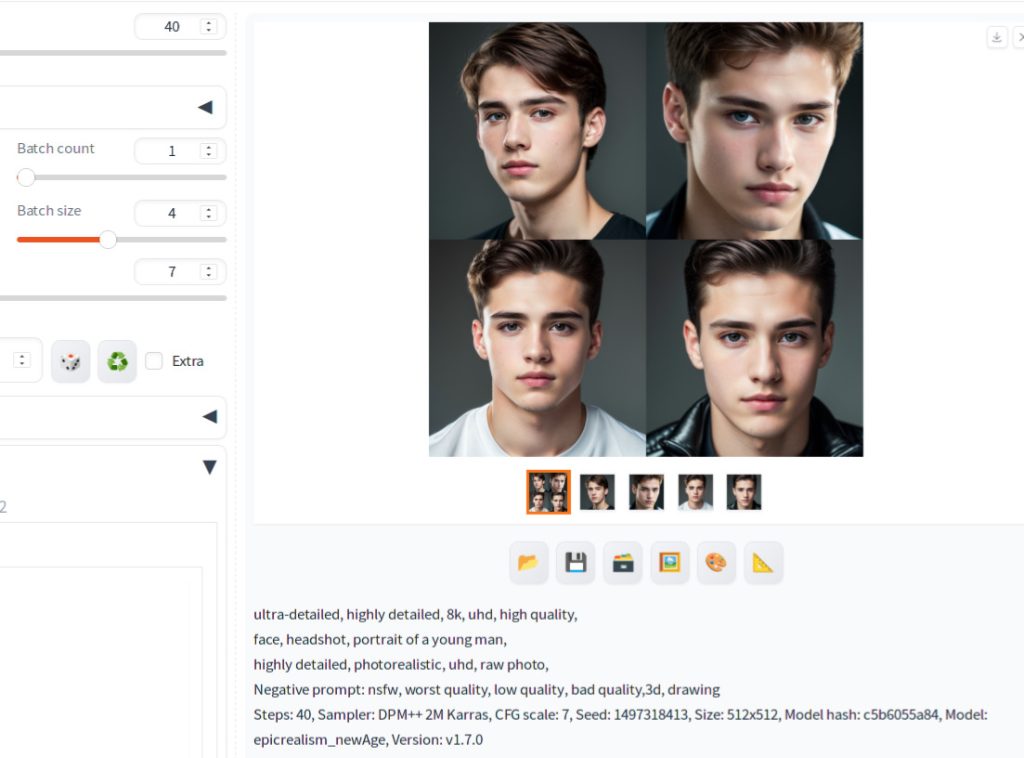

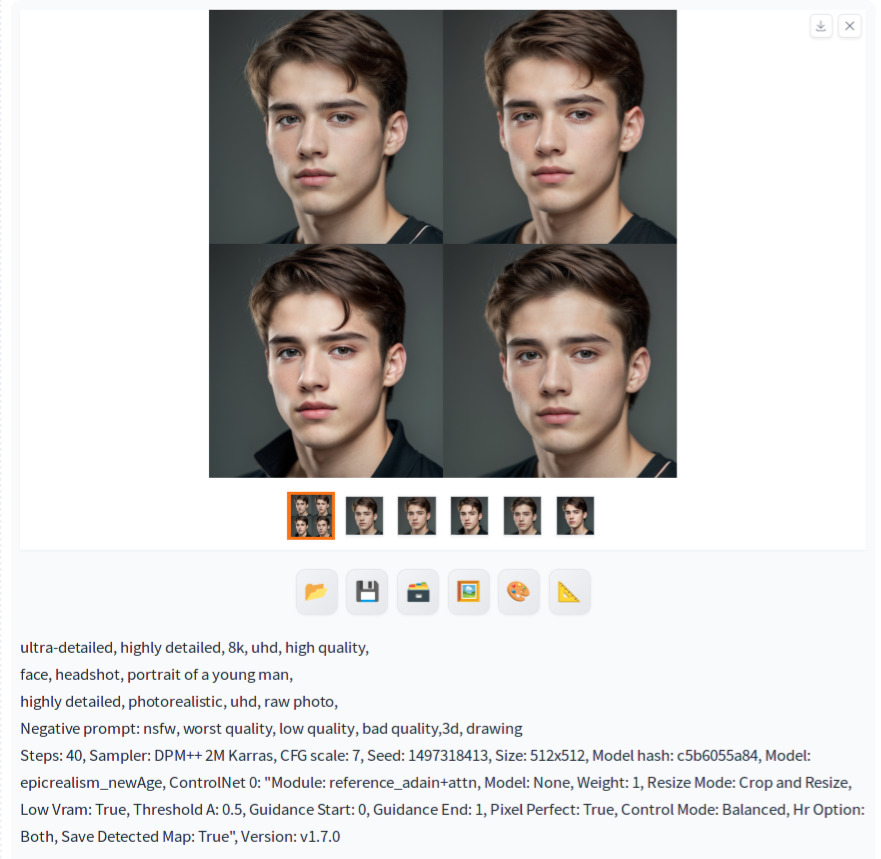

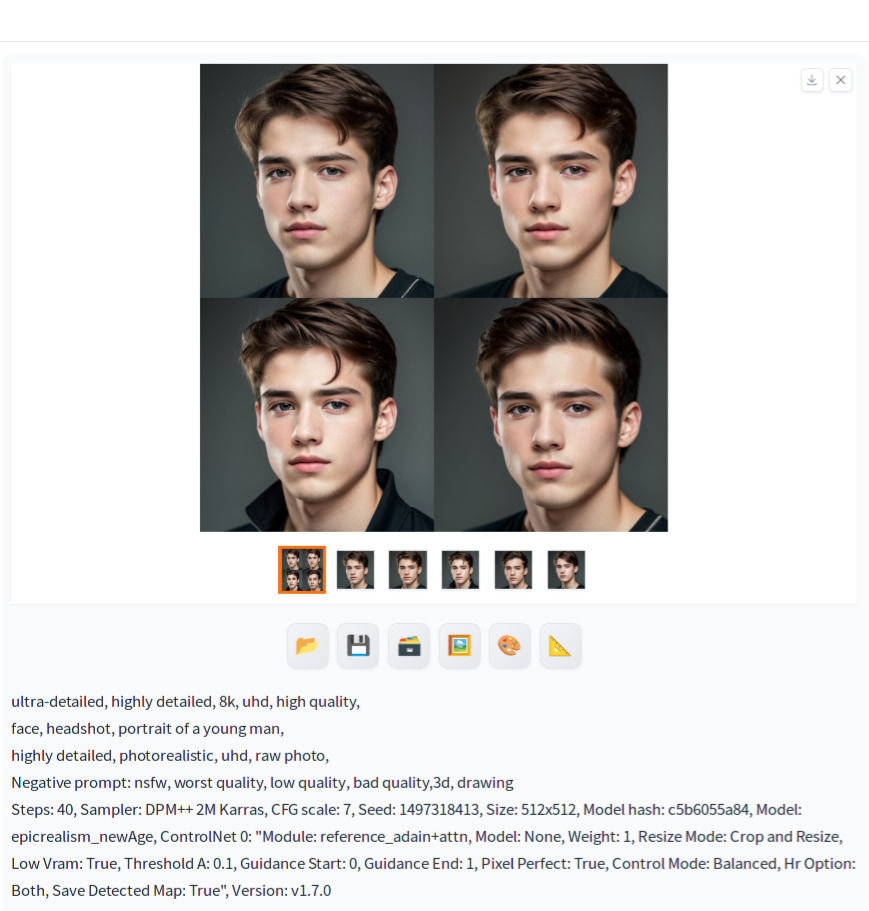

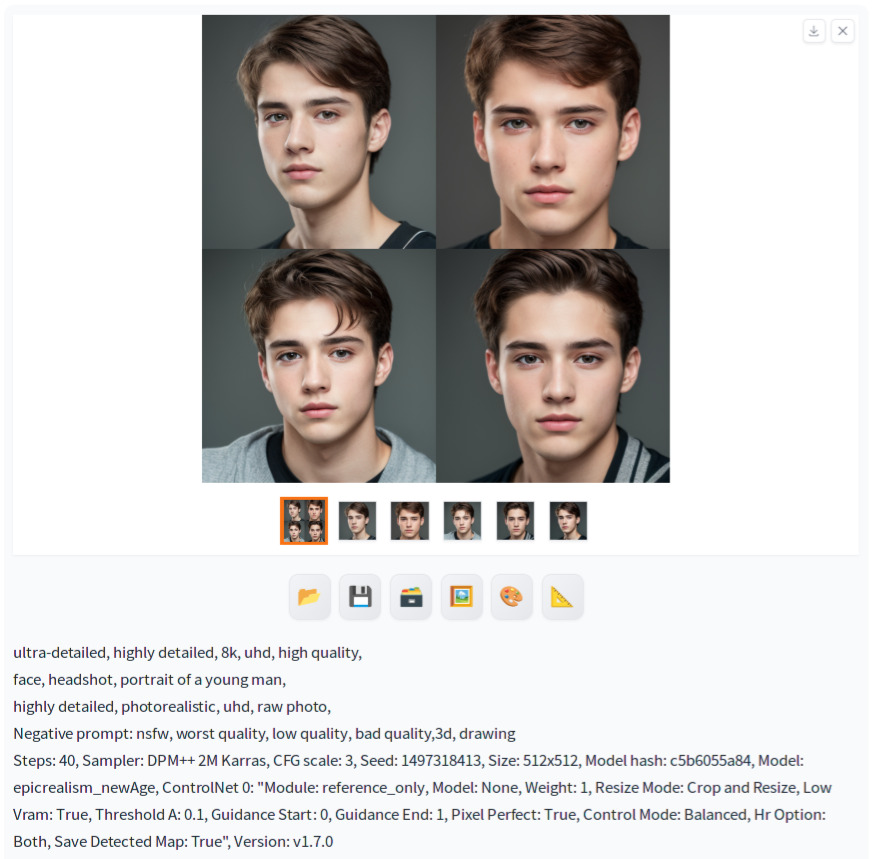

If you try to generate more images by increasing the batch size to 4, you get results like these:

As you can see, there is some resemblance between the images, but images 2, 3, and 4 are significantly different from image 1. To address this, you can use the ControlNet Reference preprocessor, which steers generation so that the output stays close to a reference image you provide. Let's dive in.

Installation

Unlike other ControlNet methods, the Reference preprocessor does not require a ControlNet model to control the generation. However, you still need to install the ControlNet extension to use it. If you are not sure how, check out our step-by-step tutorial, How to use ControlNet in Automatic1111 Part 2: Installation.

Using Reference Preprocessor

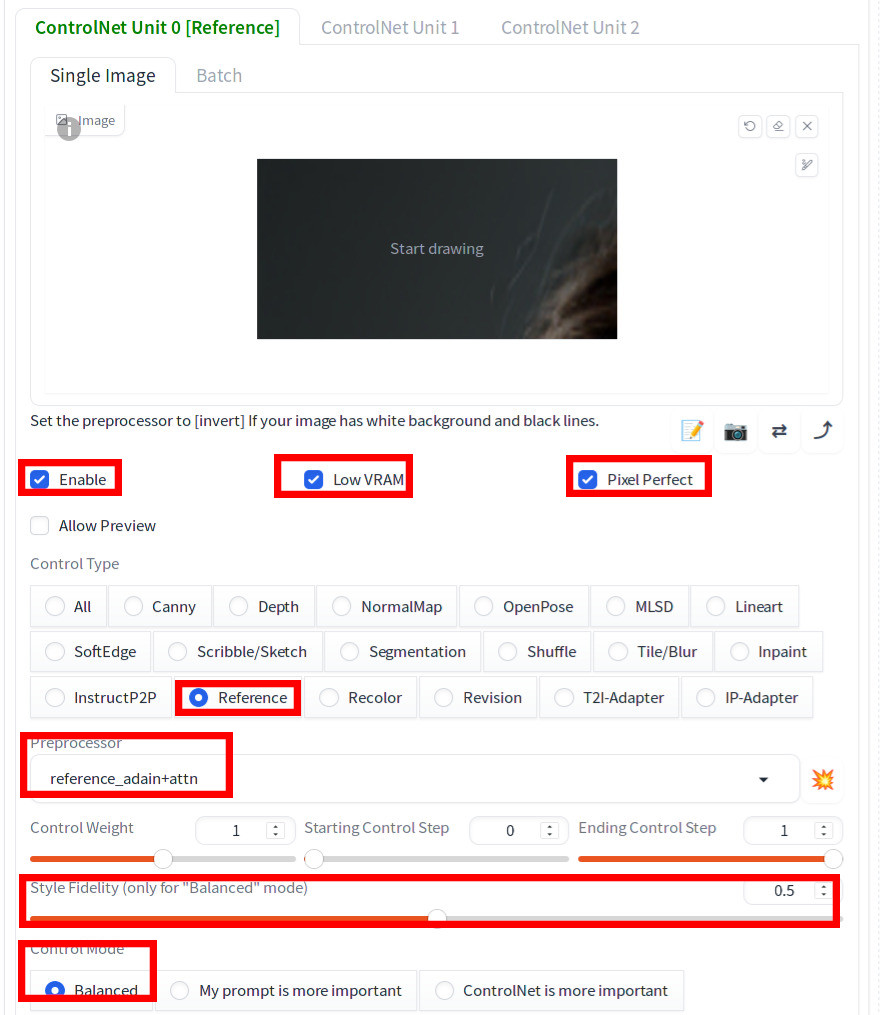

To use the Reference preprocessor, go to the txt2img tab and do the following:

- Drag and drop your reference image onto the canvas under Single Image. After you drop your image, you may not see all of it, as shown below, but that's OK.
- Check Enable
- Check Low VRAM
- Check Pixel Perfect
- In Control Type, select Reference
- In Preprocessor, select reference_adain+attn
- Set Style Fidelity to 0.5
- In Control Mode, select Balanced

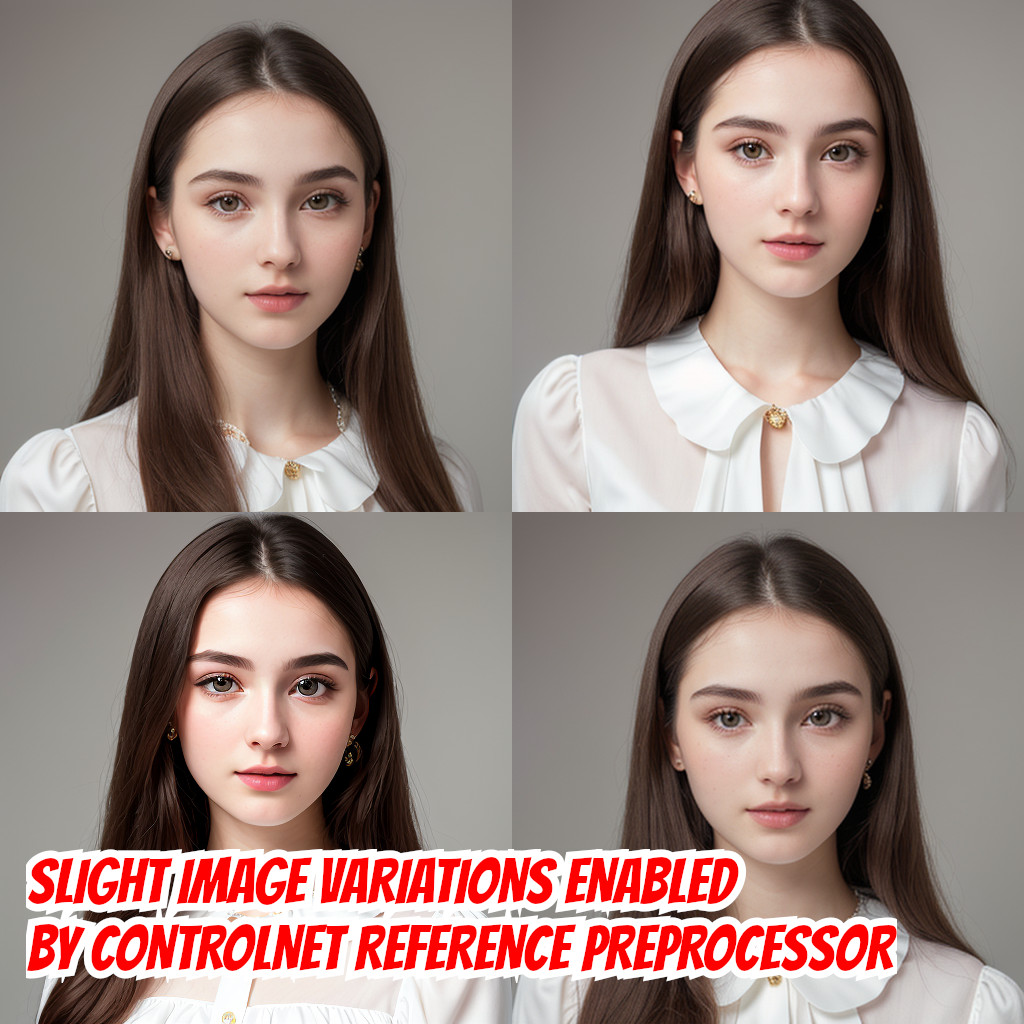

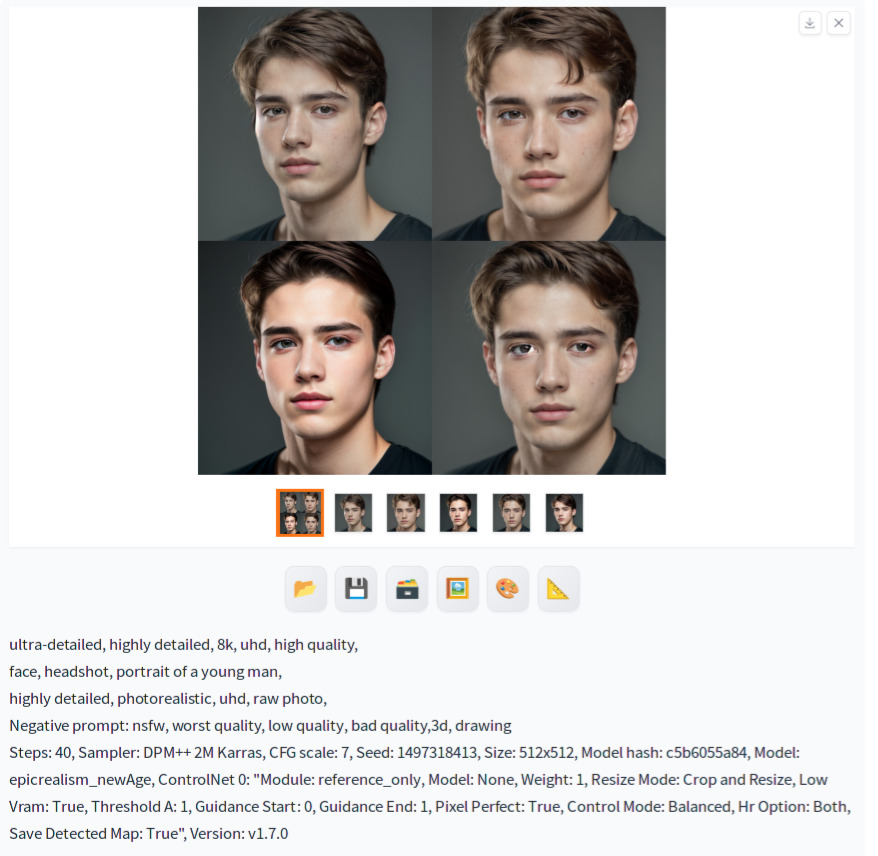

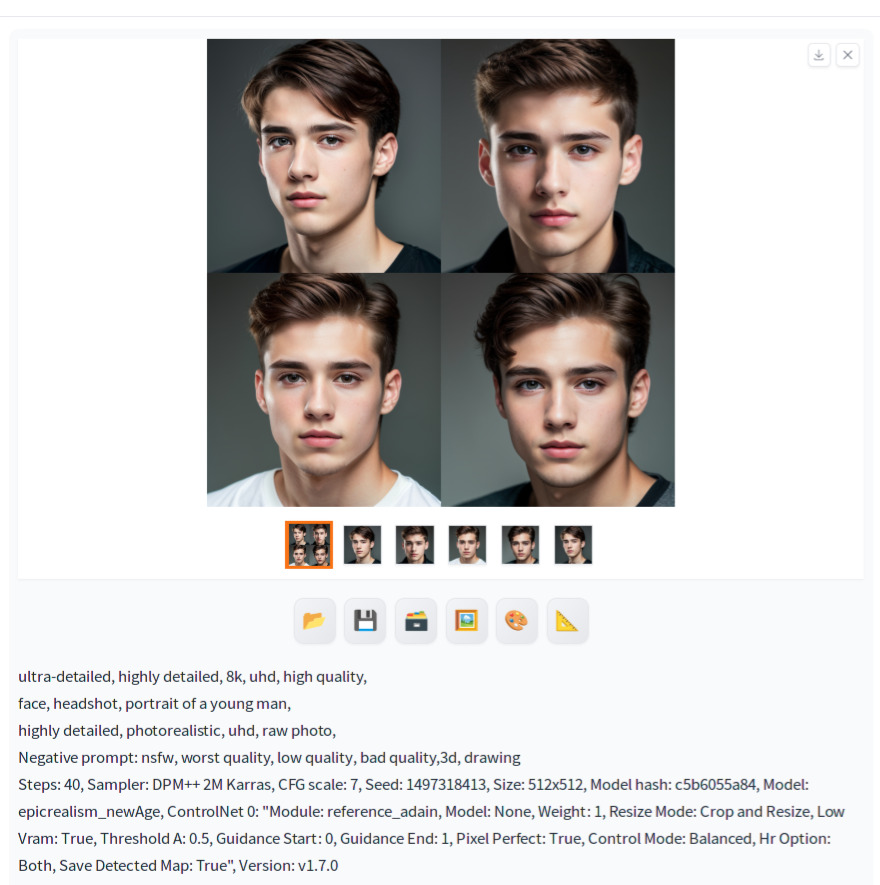

If your PC can handle generating 4 images in a batch, set the batch size to 4; if it can only handle 1 image per batch, that works as well. Now press Generate. The generated images should be very similar to each other.
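If you prefer scripting to clicking, the same settings can, in principle, be sent to the web UI over HTTP. Below is a minimal sketch, assuming the web UI was launched with the `--api` flag on its default address `http://127.0.0.1:7860` and that the ControlNet extension accepts units under `alwayson_scripts`. The unit field names (`image`, `low_vram`, `threshold_a`, `control_mode`, and so on) are our assumption of the extension's API and have changed between extension versions, so check them against your installation. We also assume Style Fidelity is sent as `threshold_a`, the field that appears as "Threshold A" in the generation parameters in the appendix. Steps, sampler, and size are copied from those same parameters.

```python
import base64
import json
import urllib.request


def build_reference_payload(image_bytes: bytes, prompt: str, negative_prompt: str = "",
                            module: str = "reference_adain+attn",
                            style_fidelity: float = 0.5, cfg_scale: float = 7.0,
                            batch_size: int = 4) -> dict:
    """Build a txt2img request body mirroring the UI steps (ControlNet field names are assumptions)."""
    controlnet_unit = {
        "enabled": True,                                          # Enable
        "image": base64.b64encode(image_bytes).decode("ascii"),  # reference image as base64
        "module": module,                                         # Preprocessor
        "model": "None",                                          # Reference needs no ControlNet model
        "low_vram": True,                                         # Low VRAM (assumed key name)
        "pixel_perfect": True,                                    # Pixel Perfect
        "threshold_a": style_fidelity,                            # assumed: Style Fidelity slider
        "control_mode": "Balanced",                               # Control Mode
    }
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "steps": 40,
        "sampler_name": "DPM++ 2M Karras",
        "cfg_scale": cfg_scale,
        "width": 512,
        "height": 512,
        "batch_size": batch_size,
        "alwayson_scripts": {"controlnet": {"args": [controlnet_unit]}},
    }


def post_txt2img(payload: dict, base_url: str = "http://127.0.0.1:7860") -> list:
    """POST the payload to the txt2img endpoint and return the base64-encoded images."""
    request = urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["images"]
```

To use it, read your reference image as bytes (for example from a hypothetical `reference.png`), pass them to `build_reference_payload`, and send the result with `post_txt2img`. Building the payload separately from sending it lets you inspect or tweak settings without a running server.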

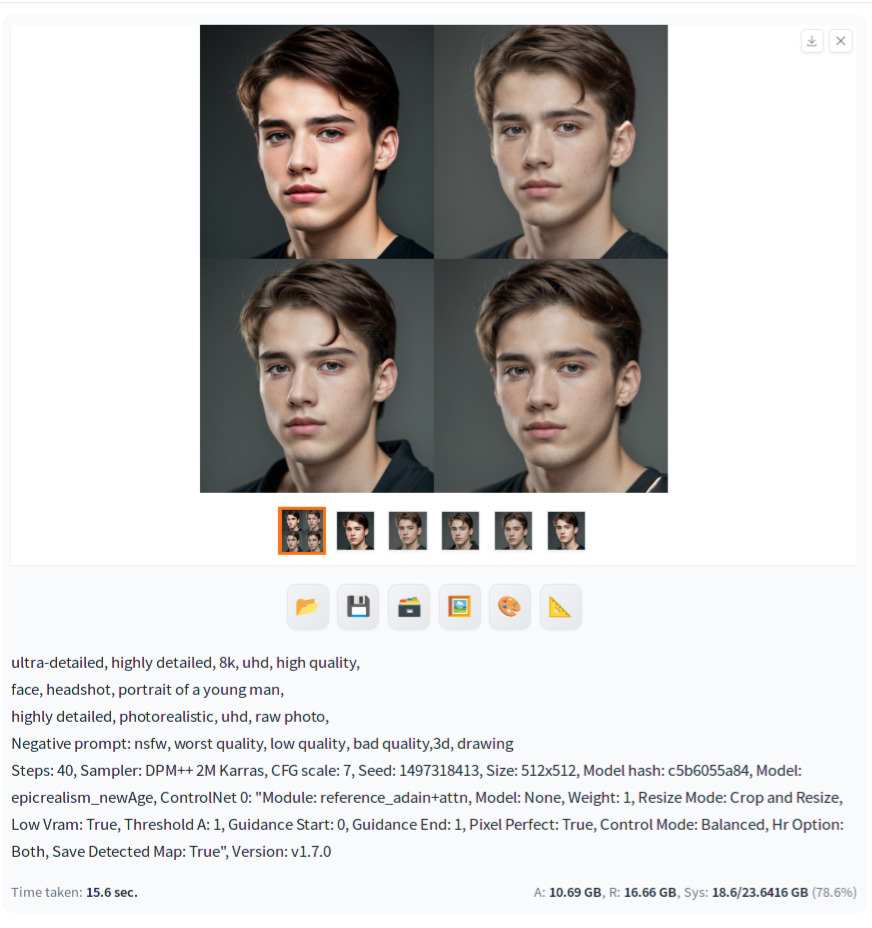

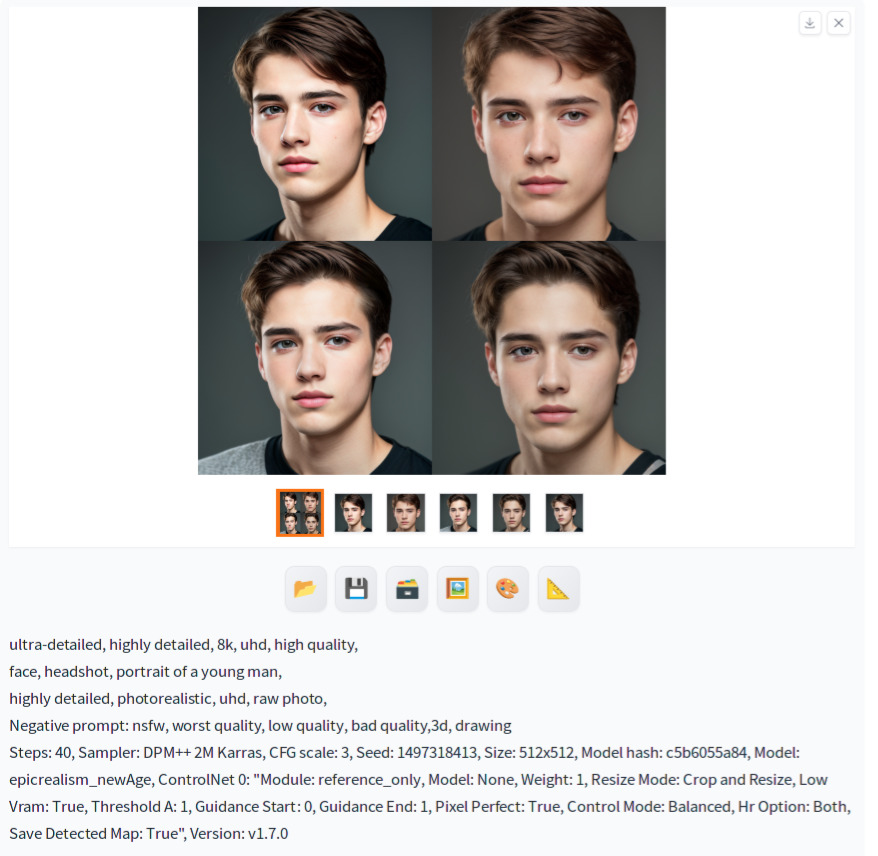

Here are the images with Style Fidelity changed from 0.5 to 1:

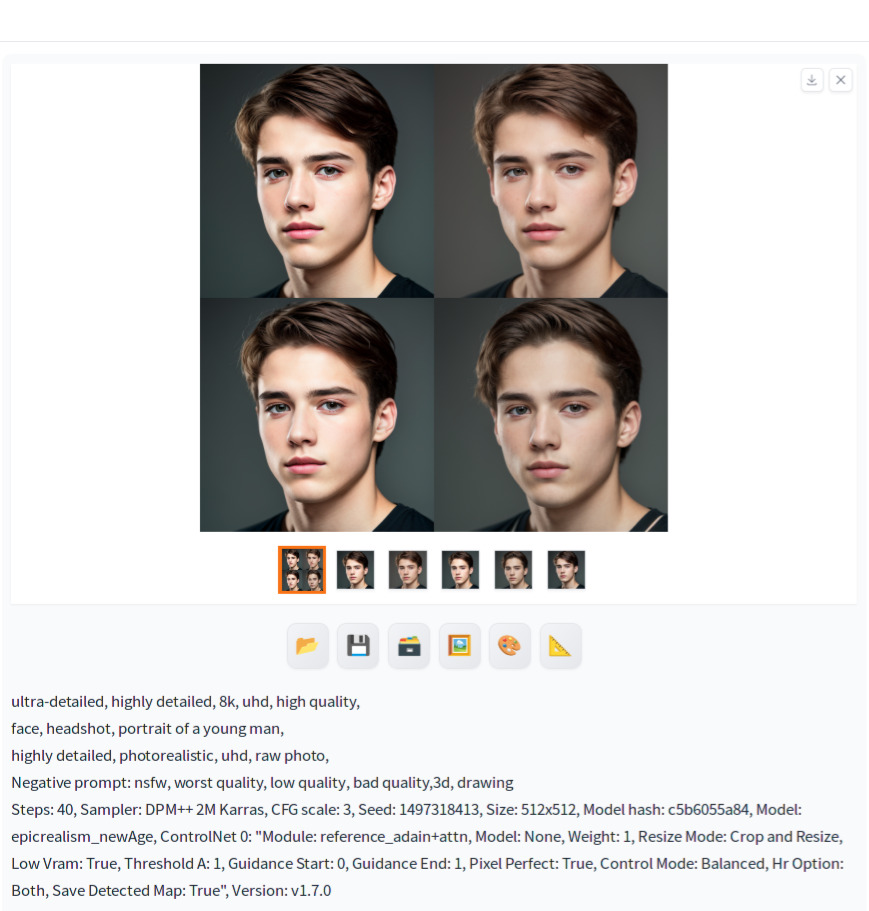

As you can see, some images look washed out. Reducing the CFG scale can help in this case: with the CFG scale lowered from 7 to 3, the images look less washed out.

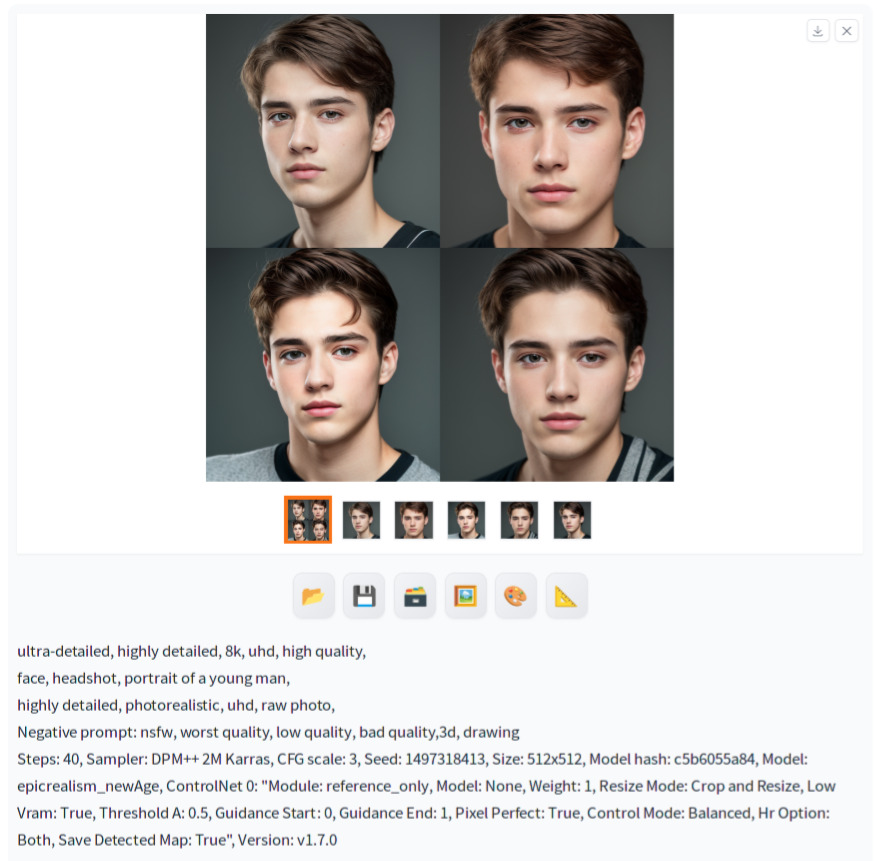

Here are the images with Style Fidelity changed to 0.1:

The faces show more variation than with Style Fidelity set to 0.5. The creator of ControlNet recommends "reference_only + Style Fidelity = 0.5" as the default [1], so you can start from that and adjust it to your needs. Note that you may also need to adjust the sampler and the number of steps.
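Because these comparisons change one or two settings at a time (the preprocessor, Style Fidelity, and the CFG scale), enumerating the combinations up front keeps a scripted comparison systematic. Below is a minimal sketch; the unit keys (`module`, `model`, `threshold_a`, `control_mode`) are assumed names for the ControlNet extension's API, with Style Fidelity assumed to map to `threshold_a`, and `reference_sweep` is a hypothetical helper, not part of the extension.

```python
from itertools import product


def reference_sweep(modules=("reference_only", "reference_adain", "reference_adain+attn"),
                    fidelities=(1.0, 0.5, 0.1),
                    cfg_scales=(7.0,)):
    """Return one settings dict per (preprocessor, Style Fidelity, CFG scale) combination."""
    runs = []
    for module, fidelity, cfg in product(modules, fidelities, cfg_scales):
        runs.append({
            "cfg_scale": cfg,
            "controlnet_unit": {
                "enabled": True,
                "module": module,          # Reference preprocessor variant
                "model": "None",           # Reference preprocessors need no ControlNet model
                "threshold_a": fidelity,   # assumed key for Style Fidelity
                "control_mode": "Balanced",
            },
        })
    return runs


# Example: the Style Fidelity = 1 comparison at CFG scale 7 and at CFG scale 3.
for run in reference_sweep(modules=("reference_only",), fidelities=(1.0,), cfg_scales=(7.0, 3.0)):
    unit = run["controlnet_unit"]
    print(unit["module"], unit["threshold_a"], run["cfg_scale"])
```

Each entry only describes the settings that change; you would merge it into a full txt2img request, keeping the seed and prompt fixed so that differences come from the swept settings.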

References

[1] lllyasviel. [New Preprocessor] The “reference_adain” and “reference_adain+attn” are added #1280. Retrieved from https://github.com/Mikubill/sd-webui-controlnet/discussions/1280.

Appendix

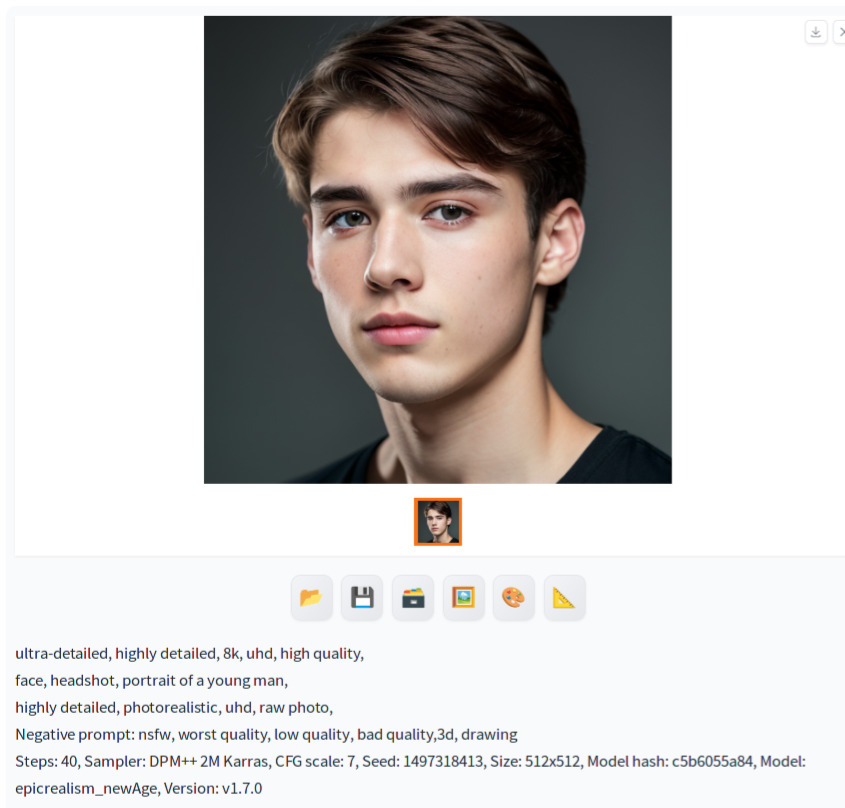

Generation parameters of the reference image

ultra-detailed, highly detailed, 8k, uhd, high quality,

face, headshot, portrait of a young man,

highly detailed, photorealistic, uhd, raw photo,

Negative prompt: nsfw, worst quality, low quality, bad quality,3d, drawing

Steps: 40, Sampler: DPM++ 2M Karras, CFG scale: 7, Seed: 1497318413, Size: 512x512, Model hash: c5b6055a84, Model: epicrealism_newAge, ControlNet 0: "Module: reference_adain+attn, Model: None, Weight: 1, Resize Mode: Crop and Resize, Low Vram: True, Threshold A: 0.1, Guidance Start: 0, Guidance End: 1, Pixel Perfect: True, Control Mode: Balanced, Hr Option: Both, Save Detected Map: True"

Example of images generated using different preprocessors

Below are examples generated with different preprocessors (reference_only and reference_adain) and different Style Fidelity values.

reference_only

Style Fidelity = 1 (CFG scale = 7)

Style Fidelity = 1 (CFG scale = 3)

Style Fidelity = 0.5

Style Fidelity = 0.1

reference_adain

Style Fidelity = 0.5

Style Fidelity = 0.1
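Finally, the generation parameters of the reference image at the start of this appendix pack every setting into one comma-separated line, with the ControlNet unit nested inside double quotes. If you want to reuse those settings in a script, a small parser can split them back out. The sketch below is our own approximation of this "Key: value" format, not the web UI's built-in parser; it returns every value as a string and parses a quoted value as a nested list of settings.

```python
import re

# One "Key: value" pair: the value is either a double-quoted string or runs to the next comma.
PARAM_RE = re.compile(r'\s*([\w ]+):\s*("(?:\\.|[^\\"])*"|[^,]*)(?:,|$)')


def parse_params(line: str) -> dict:
    """Parse a comma-separated 'Key: value' parameter line into a (possibly nested) dict."""
    params = {}
    for key, value in PARAM_RE.findall(line):
        value = value.strip()
        if len(value) >= 2 and value.startswith('"') and value.endswith('"'):
            # Quoted values (such as a ControlNet unit) contain their own Key: value list.
            params[key.strip()] = parse_params(value[1:-1])
        else:
            params[key.strip()] = value
    return params


if __name__ == "__main__":
    example = 'Steps: 40, Sampler: DPM++ 2M Karras, ControlNet 0: "Module: reference_adain+attn, Threshold A: 0.1"'
    settings = parse_params(example)
    print(settings["Sampler"], settings["ControlNet 0"]["Module"])
```

Pasting the full parameters line into `parse_params` gives you, for example, the preprocessor under `["ControlNet 0"]["Module"]` and the Style Fidelity value under `["ControlNet 0"]["Threshold A"]`.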